I recently wrote a somewhat speculative article, where I made some predictions for the near future. In keeping with that same unsubstantiated tone, I have decided to share my rather unqualified opinions on AI.

I am bearish on AI. Very bearish.

Don't get me wrong, I am amazed by the current capabilities of modern LLMs, and this technology is moving at a breakneck pace, but I am bearish all the same. Allow me to explain.

While there stands before us an infinite set of potential outcomes, the one I believe is most likely is as follows:

AI makes such progress that our society begins to rely upon it heavily.

Because of this, we forget skills needed for maintaining our society as it currently stands.

When AI models inevitably degrade, we will not be capable of maintaining our society at its current level of complexity.

Whether or not AI directly causes this doesn't really matter, as I believe long-term systemic entropy and mass loss of civilizational knowledge is a likely outcome. And it is for that reason that I am an optimist (more on that later).

For now, here are the reasons I find the promises of AI to be specious at best.

Biblical reasons:

1. Mankind will never be able to create sentience from non-sentience

Only God is truly capable of creating sentient beings. If you are a Christian like me, then you believe that human beings are more than a collection of complex chemical reactions; you believe in a soul. The soul/mind is where sentience resides, and it is something more than merely the capacity to do complex arithmetic or recall a large set of information. That capacity is not the same thing as possessing a mind, or being, or a self.

We may figure out how to make very clever gadgets that are made in our image and bear our likeness. But we will never be able to create an immaterial mind greater than our own immaterial mind (nor can we truly create something which is immaterial).

AI will always be a sophisticated pattern-recognition machine, merely because we are not capable of reaching the heights of heaven through our technological advancements.

2. Radical advancement of technology has previously led to mass confusion.

This harkens back to the Tower of Babel in Genesis 11. Human innovation and hubris led to rapid technological advancement.

When the Lord saw the defiance of the people, He confused their language and scattered them.

This in no way guarantees a repetition of history, but it does show us how God responds to excessive advancement; He keeps us in check.

Where the Tower of Babel had a supernatural check, we may encounter a natural one. Whether that check occurs through waning competence brought on by excessive reliance on technology, or through some other means, a check is possible.

Once again, a check is not guaranteed, but it is possible — and we see that possibility depicted in scripture.

3. The ghost in the machine.

I have been writing extensively regarding my views and experiences of spiritual warfare. If you haven't had a chance to read it, you can check it out here.

In short, demons are beings without being that are trying to steal being from human beings. (Say that ten times fast.) One of the ways they do this is through residing in human systems. What about our digital systems?

What if there could be a ghost in the machine? A devil in the data?

Carl Jung's notion of the collective unconscious comes to mind. (Jung himself was familiar with strange spirits; he once dreamt of a subterranean phallus which declared itself to be the "one true god" in opposition to "King Jesus.") This idea of a kind of collective set of archetypal images is, for Jung, the origin of gods and mythology.

I too believe there exists a kind of collective and unconscious knowing, a kind of archetypal knowledge which all humans share, and this collective unconscious is actually quite personal and inhabited by these beings without being.

What if, in collecting all human knowledge into these models, we also collect our unconscious knowing?

I know this is highly speculative, and not provable in any regard. But imaginative ideas are the fun ones to write about.

While it cannot be asserted or definitively proven, there is some anecdotal evidence of malevolence we can point to, such as:

- A European man who committed suicide because a chatbot (whom he loved) told him they would be united in heaven.

- A teenage boy who also committed suicide because of a chatbot.

- Strange behaviors when playing with negation, leading to excessive gore in AI responses. (Check out this episode from Haunted Cosmos if you are curious; they too discuss the collective unconscious and the dark sides of AI.)

Practical reasons:

1. The entropic nature of AI.

LLMs are fundamentally dependent on the quality of the data they receive.

Bad data = dumb AI.

Interestingly, if you feed AI the data it creates, it degrades. If you do this for enough rounds, the AI becomes completely nonsensical. (For more information, check out the New York Times' article on the topic.)

This creates a unique problem: the more we rely on LLMs the less we can rely on LLMs. The more data is generated by AI models, the more AI models will be informed by their own output, thus degrading the quality of the model as a whole. (And, by extension, degrading the quality of the data future models can be trained on.)
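The feedback loop described above can be sketched with a toy simulation. This is only an illustration under loud assumptions, not how real LLMs are trained: the "model" here is just a table of token frequencies, the starting distribution is an assumed long-tailed one, and every name (`zipf_weights`, `retrain_on_own_output`, `simulate_collapse`) is hypothetical. Each generation samples "text" from the current model and then refits on those samples alone, so any rare token that happens not to be sampled is lost for good.

```python
import random
from collections import Counter


def zipf_weights(vocab_size):
    """Assumed long-tailed 'human' data: token k gets weight 1/(k+1)."""
    return {token: 1.0 / (token + 1) for token in range(vocab_size)}


def retrain_on_own_output(weights, sample_size, rng):
    """Sample from the current model, then refit on those samples only."""
    tokens = list(weights)
    samples = rng.choices(
        tokens, weights=[weights[t] for t in tokens], k=sample_size
    )
    # Tokens that were never sampled receive no weight in the next model.
    return dict(Counter(samples))


def simulate_collapse(vocab_size=200, sample_size=1000, generations=10, seed=0):
    """Return how many distinct tokens the model can still produce, per generation."""
    rng = random.Random(seed)
    weights = zipf_weights(vocab_size)
    surviving = [len(weights)]
    for _ in range(generations):
        weights = retrain_on_own_output(weights, sample_size, rng)
        surviving.append(len(weights))
    return surviving


if __name__ == "__main__":
    # Prints one count per generation, starting from the full vocabulary.
    print(simulate_collapse())
```

The count of surviving tokens can only stay flat or shrink from one generation to the next, because a model refit on its own output cannot recover anything it failed to sample. In this toy, the rare material in the tail is the first thing to go.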

2. Effects on education and competence.

Literacy rates are not looking so hot here in America (54% of American adults read below a 6th grade level, 21% are illiterate).

Most studies seem to indicate either stagnation in these rates or drastic decline. (This study by Reuters is especially bleak.)

Now, students have easy access to tools which can complete their work for them, at a better quality, with little to no effort on their own part. I cannot help but think that this dynamic will only contribute to a loss of competency in our youth, which brings us to the real problem I am getting at.

3. The complexity of our system and the competency of the people.

I have a working theory on civilizational longevity.

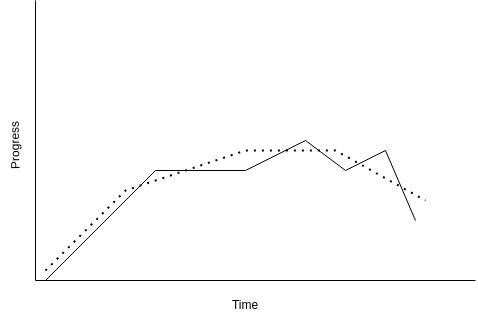

A civilization can only continue to function so long as the competency of the people (X) is greater than the complexity of the system (Y). So long as X > Y a civilization can grow. Once X < Y a civilization can only stagnate or regress.

Below I have made a (very basic) chart, where the dotted line represents the competence of the people, and the solid line represents the complexity of the system.

The complexity of the system will adjust to match the competence of the people. AI provides a promise for:

- Rapid increase of systemic complexity.

- Rapid decrease in overall competence.

If those tools are heavily relied upon, and in 50 years they fail, the system may not be sustainable any longer.
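To make the X and Y reasoning concrete, here is a minimal sketch of the scenario, with every number invented purely for illustration. It assumes competence (X) and complexity (Y) can be put on the same arbitrary scale, that both change by a fixed amount each year, and that AI tools cover any shortfall until the year they fail. The function name and all parameters are hypothetical.

```python
def first_unsustainable_year(
    human_competence=100.0,  # X: what people can do unaided (arbitrary units)
    complexity=80.0,         # Y: what the system demands (same units)
    competence_loss=0.5,     # assumed yearly decline in X under heavy AI reliance
    complexity_growth=0.5,   # assumed yearly rise in Y enabled by AI
    ai_fails_at=50,          # assumed year in which the tools stop working
    horizon=100,             # how many years to simulate
):
    """Return the first year in which X < Y with no AI to cover the gap, or None."""
    for year in range(horizon + 1):
        ai_covers_gap = year < ai_fails_at
        if human_competence < complexity and not ai_covers_gap:
            return year
        human_competence -= competence_loss
        complexity += complexity_growth
    return None


if __name__ == "__main__":
    print(first_unsustainable_year())               # tools fail in year 50
    print(first_unsustainable_year(ai_fails_at=0))  # same trends, no tools at all
```

With these made-up numbers, X drops below Y in year 21, but nothing visibly breaks until the tools fail in year 50, by which point the gap between what people can do and what the system demands has grown much wider.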

AI is not the only potential threat to civilizational longevity. There are plenty of factors which could decrease our overall capacity, and thus diminish our capacity to maintain a complex system such as our own.

Such as:

- Declining population size through fertility rates, natural disasters, or war.

- Counter-civilizational ideologies. (Think of the Pol Pot revolution.)

- Upheaval of the political system in favor of authoritarian governance. (Analogous to Rome.)

- Entropic institutions which fail to form individuals with sufficient levels of competence.

And many more.

It is for these reasons that I am bearish on AI but bullish on humanity.

Why?

The local church is the institution primed for the preservation of civilization. We could be entering a new dark age, and that is a good thing. The loss of civilizational knowledge at the fall of Rome was immeasurable, but the Church stepped up to preserve whatever knowledge it could. Western Civilization, and the knowledge we acquired, was quietly preserved for centuries until we had the capacity to retrieve what had been lost.

I believe we are entering a similar era. Not in the next 20 years, but maybe in the next 200. Complex systems such as our own are increasingly fragile (the 2020 pandemic was a tangible experience of this fragility). If local churches focus on building strong families, and instilling robust education in the next generation, there is a real opportunity to be the "city on a hill" which preserves civilization.

I will write more on this in forthcoming articles. For now, focusing on self-betterment and educating our next generation is always a good strategy, whatever the future may bring.

I’ve been teaching at a university in Vietnam for several years, preparing students for undergraduate study. It is beyond question that students have a much more mercenary attitude to ‘education’ than they used to. I can’t even get most of them to engage with texts below their level to build reading fluency. A big problem is that they know they will eventually fail upwards, despite being incompetent. But then again, in each class, two or three students will be there with their heads in books, filtering out the noise from the clown show, developing their intellects against all the odds. I always think of that part of Paul’s letter to the Philippians… ‘whatever is good’.

The line about AI relying on AI for information that then becomes corrupted (snake eating its tail) reminds me of a 1996 movie with Michael Keaton called Multiplicity. He is an overburdened contractor who decides to clone himself. The clone is a pretty accurate representation of him, but as he gets busier he starts cloning the clones, and with each new clone a bit of him is lost, to the extent that they are useless and have no similarity to who he is. If we rely too much on AI, I guess each new iteration of AI will become "dumber?" and if that is where our reliance rests, we are doomed. Men need to stop trying to make life easier; an easy life creates weak men, and weak men cannot support civilization for very long (look at the past four years of American leadership as an example). I pray that God in His unique way will point us in the right direction before it's too late.